by Dr. Jane Fitzgerald | Jun 17, 2023 | Artificial Intelligence, Emerging Technology, General, Information Technology

What is Artificial Intelligence (AI)?

Artificial intelligence (AI) is a computer-controlled entity’s ability to perform cognitive tasks and respond flexibly to its environment to increase the probability of achieving a specific goal. An AI program can learn from data and from experience and can mimic human actions, although it does not have to confine itself to biologically observable methods.

Image Credit: Piqsels.com

AI describes a computer’s ability to execute tasks that accomplish desired objectives. It also means that a computer can substantially reduce, or even eliminate, the need for human involvement in some activities.

Well-known examples from chess, Go, and Dota 2 show that computers can outperform humans in certain narrow areas. Natural language processing (NLP) and machine learning are currently the best-known fields of AI.

Types of AI

- Artificial General Intelligence (AGI)

- Artificial Narrow Intelligence (ANI)

- Artificial Super Intelligence (ASI)

What is Artificial General Intelligence (AGI)?

AGI is still a theoretical concept: AI with human-level cognitive capacity across a broad range of fields, including language processing, image processing, computation, and reasoning.

We’re still a long way from developing an AGI system. An AGI would likely need thousands of narrow AI systems working in tandem and interacting with one another to mimic human thought. Even on advanced computing systems such as Fujitsu’s K or IBM’s Watson, simulating a single second of neural activity has taken around 40 minutes. This illustrates both the immense complexity and interconnectedness of the human brain and the scale of the task of building an AGI with current resources.

What is Artificial Narrow Intelligence (ANI)?

ANI is the type of AI found on the market today; in fact, it is the only form of artificial intelligence that currently exists. Such systems are designed to solve a single problem and can perform one function very well. Their capabilities are limited by design, for example recommending products to e-commerce users or predicting the weather. They can approach, and in many cases surpass, human performance in particular situations, but only in highly controlled environments with a limited range of parameters.

What is Artificial Super Intelligence (ASI)?

Here we are almost in the realm of science fiction, but ASI is seen as the logical next step after AGI. An Artificial Super Intelligence (ASI) system could surpass human capabilities in every area, including decision-making, sound judgment, and even creating art and building interpersonal relationships.

Once Artificial General Intelligence is achieved, AI systems could rapidly boost their own abilities and push into fields we cannot foresee. While the gap between AGI and ASI may be relatively narrow (some say as short as a nanosecond, given how quickly AI could learn), the long road to AGI itself still looks like a distant dream.

Image Credit: Wikimedia

What is the Purpose of AI?

Artificial Intelligence aims to aid human performance and help us make high-level decisions with far-reaching consequences. That is the answer from a technical standpoint. From a philosophical viewpoint, Artificial Intelligence could help humans lead more fulfilling lives free of hard labor and help manage the vast network of interconnected individuals, businesses, states, and nations so that it works in a way that benefits all of humanity.

Currently, Artificial Intelligence aims to reduce human effort and help us make better choices using the many tools and techniques we have invented. AI has also been billed as humanity’s “last invention”: a development that would create breakthrough technologies and services, transform our way of life exponentially, and eventually eradicate conflict, injustice, and human stress.

That’s all in the far future, though; we’re still a long way from those kinds of outcomes. At present, the primary purpose of AI is to improve companies’ process efficiency, automate resource-intensive tasks, and produce business predictions based on hard data rather than gut feelings. As with other technologies, companies and government agencies must fund the research and development before these tools become available to everyday users.

What are the Applications of AI?

AI is used in many fields to provide insights into user behavior and to make data-based recommendations. For example, Google’s predictive search algorithm uses previous user data to predict what a user will type in the search bar next. Netflix uses past viewing data to predict which movie a user wants to watch next, keeping the user engaged with the app and maximizing watch time. Facebook has used previous user data to automatically suggest tagging friends based on the facial features in their images. Large organizations use AI to simplify end users’ lives. In general, AI applications fall under the category of data processing, including:

- Data filtering and search optimization to give the most relevant results.

- Logical if-then reasoning chains that execute sequences of commands based on parameters.

- Pattern detection to recognize essential trends and extract useful insights from large datasets.

- Applied probabilistic models to predict future results.
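The predictive-search example above can be sketched as an applied probabilistic model. The minimal Python sketch below assumes a simple bigram approach: it counts which word followed which in past queries and suggests the most probable next words. It is an illustration only, not how Google’s production system works, and `train_bigrams` and `suggest_next` are hypothetical names.

```python
from collections import Counter, defaultdict


def train_bigrams(queries):
    """Count how often each word follows another across past search queries."""
    counts = defaultdict(Counter)
    for query in queries:
        words = query.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def suggest_next(counts, word, k=3):
    """Return up to k likely next words with their estimated probabilities."""
    followers = counts.get(word.lower())  # .get avoids creating empty entries
    if not followers:
        return []
    total = sum(followers.values())
    return [(w, n / total) for w, n in followers.most_common(k)]


# Hypothetical query history.
history = ["weather today", "weather tomorrow", "weather today london", "news today"]
model = train_bigrams(history)
print(suggest_next(model, "weather"))
```

Here “today” followed “weather” in two of three queries, so it is suggested first with an estimated probability of 2/3.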

Why does it matter?

The Internet enabled global communication for everyone and dramatically changed the way we work, live, and interact. Through process automation, AI is expected to do the same: it will affect the consumer sphere, but it will also transform repetitive business processes and simple decisions.

Autonomous driving is one of the most discussed effects, but analytics, customer service, regulatory compliance, law, and management are also fields in which AI can significantly improve productivity. This cross-industry influence makes it critical that nearly everyone engages with AI.

Where are we today?

Even though AI has been around for over 50 years, we are still in the early stages. Factors such as processing power, worldwide networking, and cloud technology have just begun to open up artificial intelligence opportunities.

With large enterprise datasets now available (driven by the big data boom), AI development can be divided into three main phases. The first is pattern recognition. The second, currently underway, involves commercializing deep learning algorithms, which enable genuine learning systems built on neural networks. The third, abstract and reasoning intelligence, is still a few years away, but its development is now beginning.

Early experiments in this third phase have already been undertaken, but business applications currently focus on phase two, giving the technology plenty of room to grow in performance and adoption.
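To give a feel for what “learning” means in a neural network, here is a minimal Python sketch of a perceptron, a single artificial neuron. Deep learning stacks many layers of related units, so this is a simplified illustration rather than a deep learning system; the training data (the logical AND function), the learning rate, and the function names are assumptions chosen for clarity.

```python
def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs plus the bias is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights and a bias for one neuron using the perceptron update rule."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Nudge each weight in the direction that reduces the error.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Training data: the logical AND function (output 1 only when both inputs are 1).
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
print([predict(weights, bias, x) for x, _ in and_samples])
```

Nothing in the code states the AND rule explicitly; the neuron discovers suitable weights purely from labeled examples, which is the core idea deep learning scales up.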

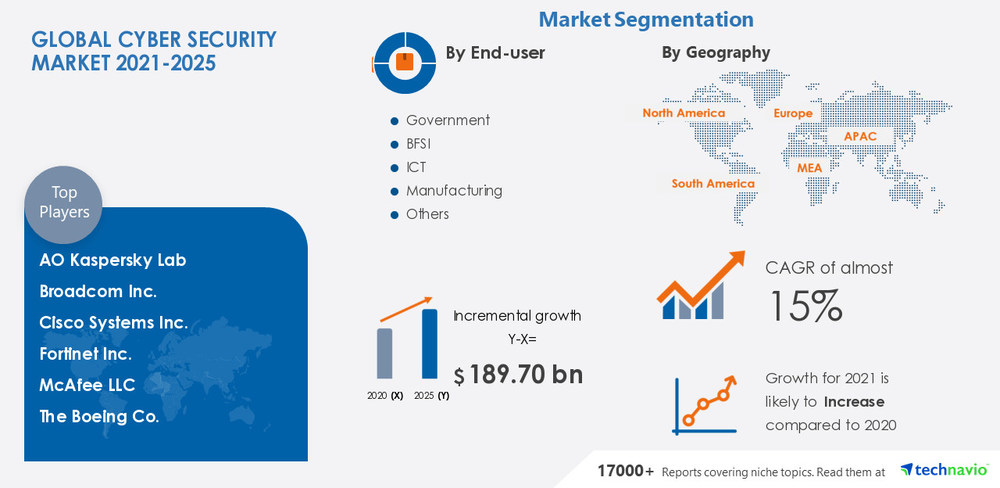

by Anika D | Nov 15, 2022 | Cybersecurity

With the digital revolution sweeping across industries, even governments now depend on computerized systems to manage their activities. This dependence makes their systems more vulnerable to cyberattacks and phishing, and this is where cybersecurity comes into the picture.

And just like other technologies, this industry has evolved tremendously since its inception. Enterprises that aim to stay ahead should therefore keep updating their cybersecurity systems. That is what this blog post is about: stay with us as we outline the top five cybersecurity trends enterprises should look out for in 2023.

Artificial Intelligence

The combination of Artificial Intelligence and Machine Learning has revolutionized cybersecurity. We all know how adaptive AI can change the customer experience in various sectors.

Experts therefore consider AI central to developing automated security systems, face detection, natural language processing, and automatic threat detection. AI-powered threat detection can further help enterprises by predicting potential attacks and instantly notifying administrators when a data breach is detected.
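The article does not say which detection techniques these products use. As a minimal sketch of the underlying idea, the Python below flags an hour whose count of failed logins lies far outside a historical baseline, using a simple z-score test. Real AI-powered tools use far richer models; the data, the threshold of 3 standard deviations, and the function name `is_anomalous` are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(baseline, observed, threshold=3.0):
    """Flag `observed` if it lies more than `threshold` standard deviations
    from the mean of the historical `baseline` (a simple z-score test)."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # needs at least two baseline values
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical data: failed logins per hour over a quiet period.
baseline = [4, 6, 5, 7, 5, 6, 4, 5]
for hour, failed_logins in [("09:00", 6), ("10:00", 48)]:
    if is_anomalous(baseline, failed_logins):
        # Stand-in for a real notification such as an email, pager, or SIEM alert.
        print(f"ALERT {hour}: {failed_logins} failed logins")
```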

Automotive Hacking

Modern vehicles are packed with advanced software and technological features that give drivers uninterrupted connectivity. This software is widely used in engine timing, cruise control, airbags, door locks, and driver assistance systems.

Because these vehicles use Wi-Fi and Bluetooth, they are vulnerable to hacking. Developers aim to gain more control over such threats by building systems that protect vehicles against hacking.

Work From Home Cybersecurity

Before the pandemic, enterprise systems were largely kept under one roof, which made security management relatively easy. Procedures were updated regularly, and systems were comparatively well protected from malware and spyware. Since the pandemic, however, the work-from-home culture has put organisations’ sensitive data at much greater risk.

Studies have also shown that employees were directly or indirectly responsible for over 34% of all attacks. Enterprises therefore expect developers to create a robust security net in 2023, one that covers every system, direct or indirect, that connects to the main server at any point.

Developing a Security-Aware Culture

It is high time employees acknowledged that cybersecurity is not solely an IT issue. Every professional today needs a grasp of cybersecurity basics, which helps them safeguard the sensitive company information stored on their devices.

So, in 2023, companies want to create a more security-aware culture. Businesses are also planning to train their workforce in best practices for avoiding business email compromise.

Supply Chain Attacks

Did you know that over 62% of global companies faced a supply chain attack in 2021? Hackers enter company networks through compromised third-party devices. As attackers adopt more advanced and robust techniques and tools, combating them has become a challenge for companies.

Therefore, it is vital that businesses adopt more proactive approaches to analyze and observe user behavior to identify suspicious access and patterns.
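Behavior analysis of this kind starts from a baseline of what normal access looks like for each user. The minimal Python sketch below assumes two hypothetical signals, an unrecognized device and access outside usual working hours; `UserProfile` and `suspicious_reasons` are illustrative names, not a real product’s API.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class UserProfile:
    """Hypothetical baseline of normal access for one user."""
    known_devices: Set[str] = field(default_factory=set)
    usual_hours: range = range(8, 19)  # 08:00 to 18:59


def suspicious_reasons(profile: UserProfile, device_id: str, hour: int) -> list:
    """Return why an access attempt looks unusual (empty list = nothing unusual)."""
    reasons = []
    if device_id not in profile.known_devices:
        reasons.append("unrecognized device")
    if hour not in profile.usual_hours:
        reasons.append("access outside usual hours")
    return reasons


alice = UserProfile(known_devices={"laptop-042"})
print(suspicious_reasons(alice, "laptop-042", 10))
print(suspicious_reasons(alice, "unknown-pc", 3))
```

In practice, flagged attempts would feed a review queue or trigger extra verification rather than block access outright.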

Bottom Line

So, these are the top five cybersecurity trends that enterprises should look out for in the coming year. It is high time businesses geared up to combat high-tech hackers. They can also hire security experts to provide better protection for their systems.

by Anika D | Sep 20, 2022 | Information Technology

In recent years, technological advancements have changed the medical field. Manually filing records is becoming a thing of the past. With emerging technologies in electronic recordkeeping and telehealth, patients can easily access their data, and these technologies also improve efficiency and communication in nursing. Let us look at how emerging technologies are affecting nursing practice.

Benefits of Nursing Technology

Advances in technology and the latest devices have enhanced the quality of life for healthcare professionals and patients in the following ways:

Better Accessibility

Electronic Health Records (EHRs) have transformed the healthcare space. An EHR is a digital version of a patient’s medical history, including progress notes, problems, providers, lab data, medications, and more. EHRs can improve patient care by making medical records more accurate and making data easily accessible to doctors and patients.
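As a rough illustration of the data an EHR holds, the Python sketch below models a simplified record with the fields listed above and a helper that returns the most recent lab result. The schema and the names `HealthRecord` and `LabResult` are hypothetical; production systems typically follow interoperability standards such as HL7 FHIR rather than ad hoc classes like these.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class LabResult:
    test: str
    value: float
    unit: str
    taken_on: date


@dataclass
class HealthRecord:
    """A simplified, hypothetical EHR; real systems use standard schemas."""
    patient_id: str
    problems: List[str] = field(default_factory=list)
    medications: List[str] = field(default_factory=list)
    providers: List[str] = field(default_factory=list)
    progress_notes: List[str] = field(default_factory=list)
    labs: List[LabResult] = field(default_factory=list)

    def latest_lab(self, test: str) -> Optional[LabResult]:
        """Return the most recent result for one lab test, or None if there is none."""
        matches = [r for r in self.labs if r.test == test]
        return max(matches, key=lambda r: r.taken_on, default=None)


record = HealthRecord("P-0001", problems=["type 2 diabetes"], medications=["metformin"])
record.labs.append(LabResult("HbA1c", 7.2, "%", date(2023, 1, 10)))
record.labs.append(LabResult("HbA1c", 6.8, "%", date(2023, 4, 12)))
print(record.latest_lab("HbA1c"))
```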

In addition, advances in telehealth have played a large part in improving accessibility. Telecommunication systems make it easy for geographically distant patients to get nursing care through live video conferencing, remote patient monitoring, and mobile health apps, so you can access quality care even in remote areas these days.

Decreased Human Error

Emerging technologies can reduce the chance of human error, which matters because nurses working long hours are at higher risk of making mistakes. With the latest medical technologies, routine procedures have also become simpler. For instance, smart beds can help nurses check patients’ weight and track movement and vital signs, and automated IV pumps can control the medication dosage delivered to patients. Moreover, EHRs can reduce bedside mistakes because patient data is readily available.
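The calculations behind an infusion are simple but error-prone by hand, which is part of why automation helps. The Python sketch below uses the standard nursing formulas (rate in mL/h = volume ÷ time in hours; drip rate in drops/min = volume × drop factor ÷ time in minutes) and adds a hypothetical maximum-rate check in the spirit of the limits smart pumps can enforce. It is illustrative only and not clinical guidance; the limit value and function names are assumptions.

```python
def infusion_rate_ml_per_h(volume_ml: float, duration_h: float) -> float:
    """Pump rate in mL/h: total volume divided by infusion time in hours."""
    if duration_h <= 0:
        raise ValueError("duration must be positive")
    return volume_ml / duration_h


def drip_rate_gtt_per_min(volume_ml: float, drop_factor_gtt_per_ml: float,
                          duration_min: float) -> float:
    """Gravity drip rate in drops/min: volume x tubing drop factor / minutes."""
    if duration_min <= 0:
        raise ValueError("duration must be positive")
    return volume_ml * drop_factor_gtt_per_ml / duration_min


def within_limit(rate_ml_per_h: float, max_rate_ml_per_h: float) -> bool:
    """Hypothetical guardrail: reject zero/negative rates and rates above the limit."""
    return 0 < rate_ml_per_h <= max_rate_ml_per_h


rate = infusion_rate_ml_per_h(1000, 8)  # 1,000 mL over 8 hours
print(rate, drip_rate_gtt_per_min(1000, 15, 480))
print(within_limit(rate, 150), within_limit(rate * 10, 150))  # a misplaced decimal is caught
```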

Positive Impact on Nursing Shortage

Nurse burnout is an important factor in the nursing shortage. Prolonged physical and mental exhaustion leaves nurses feeling stretched thin, leading some to leave their practice setting. Telehealth technologies can lighten nurses’ burden, since fewer nurses may be needed to provide adequate care.

In addition, telehealth allows nurses to reach areas with a shortage of health professionals, such as rural regions, by providing effective care to patients remotely.

Potential Drawbacks of Nursing Technology

The rapid advancement of technology in healthcare has also brought potential obstacles that many hospital systems face. Some of them are as follows:

Threat to Human Interaction

Recent technology threatens to replace some direct interaction between patients and nurses. Nurses build relationships with patients and their families, and they are responsible for explaining medications and taking vital signs. In some hospitals, nurses now record patient information on a computer. Although typed records may seem more trustworthy to healthcare professionals and patients, they inevitably reduce person-to-person interaction.

Data and Security Threats

EHRs stored in large databases or in the cloud are susceptible to hacking. Cybercrime against hospitals is common because patient details can be sold on the black market. The entire EHR system can also be put at risk if an employee accidentally clicks on malware. Hospitals that suffer a data breach can face hefty fines depending on its severity.

Final Words

Emerging technology in nursing is the next wave in the healthcare industry. Advanced technologies in AI, EHRs, software, and app development are becoming popular as more hospitals integrate them into their health systems. Though there are some drawbacks, information technology clearly has great potential to improve quality of life.

by Anika D | Aug 16, 2022 | Industrial IoT, Information Technology

Before creating an Internet of Things (IoT) strategy, you must know exactly what IoT is. The answer is simple: IoT is the network of physical devices, objects, vehicles, and so on that contain sensors, software, and chips allowing them to collect and exchange data. IoT also enables objects to be sensed or controlled remotely across existing network infrastructure. Here are some steps to jump-start a successful IoT journey:

Make a Business Case

First, decide on your objectives and state the value proposition. Then, look for situations where connecting objects can create opportunities. Also, determine how machine data from different sources can reduce operating costs, improve efficiency, and support timely decisions.

Select the Right IoT Platform

Be careful about the complexity risks that come with running multiple systems. Experts recommend partnering with an IoT platform vendor that offers a unified framework for all your IoT requirements. Many software vendors have entered the market recently with IoT products, but few provide complete services and products.

Without a complete platform, you must depend on different partners for development, which increases complexity and system integration costs.

Build and Test

The IoT value chain is about integration, from sensors and edge devices to gateways, enterprise systems, and beyond. A major challenge to the promise of IoT applications is that a system must integrate all the components of this value chain. Unlocking the potential of numerous intelligent things is difficult because they rely on many different communication standards.

Building IoT applications requires a lot of time and resources. An IoT architecture that exposes well-defined APIs can simplify interoperability among heterogeneous objects.
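One common way to achieve this interoperability is the adapter pattern: each device type gets a thin wrapper that translates its native format into one shared interface. In the Python sketch below, `VendorASensor` and `VendorBSensor` are hypothetical devices with made-up payload formats, and `read()` is the assumed common API.

```python
from dataclasses import dataclass
from typing import Iterable, Protocol


@dataclass
class Reading:
    """The common format every device is translated into."""
    device_id: str
    celsius: float


class TemperatureSource(Protocol):
    """The shared API: anything with a read() method returning a Reading."""
    def read(self) -> Reading: ...


class VendorASensor:
    """Hypothetical device that reports Fahrenheit in a dict, e.g. {'temp_f': 68.0}."""
    def __init__(self, device_id: str, payload: dict):
        self.device_id = device_id
        self.payload = payload

    def read(self) -> Reading:
        return Reading(self.device_id, (self.payload["temp_f"] - 32) * 5 / 9)


class VendorBSensor:
    """Hypothetical device that reports Celsius as a text line, e.g. 'T=21.5C'."""
    def __init__(self, device_id: str, line: str):
        self.device_id = device_id
        self.line = line

    def read(self) -> Reading:
        value = self.line.split("=", 1)[1].rstrip("C")
        return Reading(self.device_id, float(value))


def average_temperature(sources: Iterable[TemperatureSource]) -> float:
    """Works with any mix of devices because it relies only on read()."""
    readings = [s.read() for s in sources]
    return sum(r.celsius for r in readings) / len(readings)


sensors = [VendorASensor("a-1", {"temp_f": 68.0}), VendorBSensor("b-7", "T=21.5C")]
print(average_temperature(sensors))
```

Adding a new device type then means writing one more small adapter, while the analytics code stays unchanged.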

Deploy – Internet of Things

The main asset of Internet of Things applications is sensor data. When sensors generate huge amounts of data, inspecting it and obtaining real-time insights is a major challenge. Therefore, prepare the business to handle that volume and variety of data as the number of devices scales.

From a functional point of view, complex algorithms are needed to transform this sheer volume of data into business intelligence. A powerful analytics tool is important for unlocking the value of the data for business decisions.
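A common first step in taming sensor volume is to summarize raw readings into fixed time windows (a “tumbling window”) before analysis. The minimal Python sketch below assumes readings arrive as `(device_id, timestamp_s, value)` tuples; the 60-second window, the chosen statistics, and the name `aggregate` are assumptions, and production systems would typically use a dedicated stream-processing tool.

```python
from collections import defaultdict


def aggregate(readings, window_s=60):
    """Summarize raw (device_id, timestamp_s, value) readings per device and window.

    Returns {(device_id, window_start): {"count": n, "mean": m, "max": x}}.
    """
    buckets = defaultdict(list)
    for device_id, ts, value in readings:
        window_start = ts - ts % window_s  # align timestamp to the start of its window
        buckets[(device_id, window_start)].append(value)
    return {
        key: {"count": len(vals), "mean": sum(vals) / len(vals), "max": max(vals)}
        for key, vals in buckets.items()
    }


readings = [
    ("pump-1", 0, 10.0), ("pump-1", 30, 14.0),  # first minute
    ("pump-1", 65, 11.0),                       # second minute
    ("pump-2", 10, 3.0),
]
summary = aggregate(readings)
print(summary[("pump-1", 0)])
```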

Manage and Maintain

Managing remote assets becomes much easier when you build big data, mobility, and the cloud into your IoT architecture. Mobile applications complement IoT: they let you control assets with a single tap and receive notifications and alerts when connected devices deviate from normal behavior. For instance, a facilities manager for a mobile tower site may receive real-time information on the fuel status of the site’s generator.
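The generator example can be sketched as a threshold alert. The Python below assumes fuel level is reported as a percentage and uses two hypothetical thresholds (alert below 20%, re-arm above 30%) so that a reading hovering around the cut-off does not trigger a flood of notifications; `FuelAlert` is an illustrative name, and delivering the alert to a phone is out of scope.

```python
class FuelAlert:
    """Raise one alert when fuel falls below `low`; re-arm only once it rises above `high`.

    The gap between the two thresholds (hysteresis) stops a level hovering
    around a single cut-off from flooding the manager's phone with alerts.
    """

    def __init__(self, low=20.0, high=30.0):
        self.low = low
        self.high = high
        self.armed = True

    def update(self, fuel_percent):
        """Return an alert message for this reading, or None."""
        if self.armed and fuel_percent < self.low:
            self.armed = False
            return f"Generator fuel low: {fuel_percent:.0f}%"
        if not self.armed and fuel_percent > self.high:
            self.armed = True  # refueled, so future drops should alert again
        return None


levels = [45, 25, 19, 18, 21, 19, 60, 15]  # hypothetical readings over time
alert = FuelAlert()
messages = [m for m in map(alert.update, levels) if m]
print(messages)
```

Of the eight readings, only the first drop below 20% and the drop after refueling produce notifications.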

Similarly, a smartphone with built-in sensors can act as a connectivity hub that interacts with its environment, paving the way for myriad applications. IoT solutions are often more valuable when mobile apps accompany them.

Final Thoughts

The future of the Internet of Things will be full of new inventions. According to experts, around 20 to 30 billion devices will be connected to the internet by the end of 2022, a figure that has kept increasing in recent years.

by Anika D | Aug 5, 2022 | Cybersecurity, Emerging Technology, Information Technology

Email is part of every business in today’s world, but it is also one of the biggest security risks. Business Email Compromise (BEC) is rising every year, and it is becoming common for criminals to probe organizations with various phishing and spoofing attacks. Ransomware has grown rapidly in recent years and poses an increasing risk to companies, and many people don’t realize that email is a common entry point for it.

Fortunately, there are countermeasures. The following email security practices can go a long way toward protecting organizations from several threats. Here are some best practices to avoid Business Email Compromise:

Use Strong Passwords

It would be best to use strong passwords that meet the following criteria:

- It must meet a minimum length requirement.

- It must contain letters and numbers.

- It must contain both lowercase and uppercase letters.

- It must not contain your full name.

- It should not be a commonly guessed password like 123456 or abcdef.
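The criteria above translate directly into code. The following Python sketch checks a password against them; the 12-character minimum and the short list of common passwords are assumptions (the article does not specify them), `password_problems` is a hypothetical name, and a real system would use a much larger list of breached passwords.

```python
# Assumptions: the article gives no minimum length or password list.
MIN_LENGTH = 12
COMMON_PASSWORDS = {"123456", "abcdef", "password", "qwerty", "111111"}


def password_problems(password: str, full_name: str = "") -> list:
    """Return the criteria this password fails (an empty list means it passes)."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if not (any(c.isalpha() for c in password) and any(c.isdigit() for c in password)):
        problems.append("needs both letters and numbers")
    if not (any(c.islower() for c in password) and any(c.isupper() for c in password)):
        problems.append("needs both lowercase and uppercase letters")
    # Ignore very short name parts to avoid flagging initials.
    name_parts = [p.lower() for p in full_name.split() if len(p) > 2]
    if any(part in password.lower() for part in name_parts):
        problems.append("contains part of your name")
    if password.lower() in COMMON_PASSWORDS:
        problems.append("is a commonly guessed password")
    return problems


print(password_problems("123456"))
print(password_problems("JaneSmith2024!", full_name="Jane Smith"))
print(password_problems("Blue7Harbor!Lamp"))
```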

Use 2FA (Two-Factor Authentication)

Many people think that only professionals can set up 2FA, but it is not a difficult task; it is more like adding another shield to your account. Thankfully, almost every platform offers 2FA, and if yours doesn’t, you can switch to an email provider that does. Even if a hacker guesses your password, 2FA can stop them from logging in before they get a sneak peek at your emails. Two-factor authentication codes are usually delivered by email, SMS, or voice call, or generated as one-time passwords (OTPs) by an authenticator app.
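Authenticator apps usually generate their codes with the time-based one-time password (TOTP) algorithm from RFC 6238, which builds on HOTP from RFC 4226. The standard-library Python sketch below shows the idea: the app and the server share a secret, and both derive the same short code from the current 30-second time step. It is for illustration only; in production, use a vetted library and store secrets securely. The demo secret is an arbitrary example value.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # "dynamic truncation": pick 4 bytes based on the last nibble
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret_b32: str, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    key = base64.b32decode(secret_b32, casefold=True)
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify(secret_b32: str, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret_b32, now + d * step, step), submitted)
        for d in range(-window, window + 1)
    )


# Example secret for illustration only; real secrets are generated per user.
demo_secret = "JBSWY3DPEHPK3PXP"
code = totp(demo_secret)
print(code, verify(demo_secret, code))
```

Because the code depends on both the secret and the current time, a stolen password alone is not enough to log in.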

Be Careful of Suspicious Attachments

Nowadays, file sharing in the workplace mostly happens via collaboration tools like OneDrive, Dropbox, or SharePoint. Therefore, you should treat every email attachment with suspicion, even if it comes from a colleague. Be especially careful with attachments that have unfamiliar extensions or extensions that commonly deliver malware payloads (.zip, .exe, .scr, etc.).
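Mail filters often apply the same caution automatically. The Python sketch below flags attachments whose final extension is on a risk list and warns when a harmless-looking extension hides the real one (as in `invoice.pdf.exe`). The extensions beyond .zip, .exe, and .scr are assumed additions, `attachment_warnings` is a hypothetical name, and extension checks are only one signal, since attackers can rename files.

```python
from pathlib import PurePath

# .zip, .exe and .scr come from the article; the rest are assumed additions.
RISKY_EXTENSIONS = {".zip", ".exe", ".scr", ".js", ".vbs", ".bat", ".iso"}


def attachment_warnings(filename: str) -> list:
    """Return reasons to treat an attachment with extra caution (empty = none found)."""
    suffixes = [s.lower() for s in PurePath(filename).suffixes]
    warnings = []
    if suffixes and suffixes[-1] in RISKY_EXTENSIONS:
        warnings.append(f"risky file type {suffixes[-1]}")
        if len(suffixes) >= 2:
            # e.g. "invoice.pdf.exe" hides an executable behind a document-like name
            warnings.append(f"real type hidden behind {suffixes[-2]}")
    return warnings


for name in ["Q3-report.pdf", "invoice.pdf.exe", "Photos.ZIP"]:
    print(name, attachment_warnings(name))
```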

Never Access Your Emails from Public Wi-Fi

If you take office devices home or open work emails on personal devices, do not access your email over public Wi-Fi. Cybercriminals can easily intercept data passing through publicly accessible Wi-Fi, putting both your sensitive data and your login credentials at risk. Access email only when you are confident the network is secure; outside the office, opt for a safer option such as mobile data or an internet dongle.

Change Password Regularly

Many people keep the same password for years, which is one of the biggest cybersecurity mistakes. The simplest yet most effective email security practice is changing passwords regularly. Make sure you do the following:

- Set a new email password every 2 to 4 months.

- Update your credentials yourself, or with a trusted tool, instead of leaving it to other people.

- Do not just add one or two characters to the current password when creating a new one. Change the entire password.

- Do not use passwords that you already used in the past.
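A password-change screen could enforce these rules. The Python sketch below assumes a 120-day maximum age (the upper end of the 2-to-4-month range), treats a new password within two edits of the current one as “only one or two characters changed”, and checks reuse against salted PBKDF2 hashes so that old passwords never need to be stored in plain text. The thresholds, iteration count, and function names are hypothetical.

```python
import hashlib
import hmac
import os
from datetime import date, timedelta

MAX_AGE = timedelta(days=120)  # upper end of the 2-to-4-month window
MIN_CHANGED_CHARS = 3          # assumption: changing 1-2 characters is not enough


def hash_password(password: str, salt: bytes) -> bytes:
    """Slow, salted hash so old passwords never need to be kept in plain text."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 200_000)


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def rotation_due(last_changed: date, today: date) -> bool:
    return today - last_changed >= MAX_AGE


def new_password_problems(new: str, current: str, history) -> list:
    """`history` holds (salt, hash) pairs for previously used passwords."""
    problems = []
    if edit_distance(new, current) < MIN_CHANGED_CHARS:
        problems.append("only slightly different from the current password")
    if any(hmac.compare_digest(hash_password(new, salt), h) for salt, h in history):
        problems.append("was used before")
    return problems


# The current password is typed in by the user while changing it.
salt = os.urandom(16)
history = [(salt, hash_password("Winter2023!", salt))]
print(rotation_due(date(2024, 1, 1), date(2024, 5, 1)))
print(new_password_problems("Summer2024?", "Summer2024!", history))
```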

Wrapping Up

BEC is a form of crime with potentially severe consequences. BEC criminals often operate from other countries, which makes them difficult for law enforcement to prosecute. Prevention and detection are therefore crucial, so all companies should educate their employees and create an environment that encourages compliance.

by Anika D | Aug 2, 2022 | Artificial Intelligence, Information Technology

Have you noticed that your accounts now require multiple identity verification methods when you log in? You can no longer access your email, accounting system, or cloud applications by entering only your username and password. Instead, you need to enter a short code you receive on your phone or via email. Sometimes you may also be asked to verify your identity with a phone call, biometric data, or a smart card.

Do you know what this is? It is multi-factor authentication (MFA), which combines two or more kinds of evidence to verify your identity. MFA is often summarized as “something you know, something you have, and something you are.” For instance, it might combine a username and password (something you know) with a token (something you have) or biometrics (something you are).
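The key point is that the factors must come from different categories; two passwords are still just one factor. The Python sketch below encodes that rule; the method names, their mapping to categories, and `is_multi_factor` are illustrative assumptions.

```python
from enum import Enum


class Factor(Enum):
    KNOWLEDGE = "something you know"   # password, PIN
    POSSESSION = "something you have"  # phone, hardware token, smart card
    INHERENCE = "something you are"    # fingerprint, face


# Illustrative mapping from verification methods to factor categories.
METHOD_CATEGORY = {
    "password": Factor.KNOWLEDGE,
    "pin": Factor.KNOWLEDGE,
    "sms_code": Factor.POSSESSION,
    "authenticator_app": Factor.POSSESSION,
    "smart_card": Factor.POSSESSION,
    "fingerprint": Factor.INHERENCE,
}


def is_multi_factor(methods) -> bool:
    """True only if the methods cover at least two different factor categories."""
    return len({METHOD_CATEGORY[m] for m in methods}) >= 2


print(is_multi_factor(["password", "pin"]))       # two things you know
print(is_multi_factor(["password", "sms_code"]))  # something you know + something you have
```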

Setting up multiple verification methods may seem like a hassle, but it makes your accounts much more secure. Below are some reasons to enable MFA:

Stay Secure If Passwords Get Stolen

Password theft techniques keep evolving. Cybercriminals’ main methods for stealing passwords are keylogging, phishing, and pharming. Keylogging involves secretly recording the keys struck on a keyboard. Phishing uses fraudulent calls, emails, or SMS messages that ask for your sensitive information. Pharming involves installing malicious code on a device that redirects users to fraudulent sites.

Any individual or enterprise can easily become a victim of these attacks. MFA, however, helps keep your identity and accounts secure even if your password is leaked.

MFA Adapts with Change in the Workplace

These days many employees work outside the office. With this change in the workplace, you need more advanced MFA solutions that can handle complex access requests. Adaptive MFA offers multiple layers of protection and evaluates potential risk by looking at the user’s location and device.

For instance, if you log in from your office, you may not be prompted for additional security factors. But if you log in from a café on your mobile phone, you may receive an SMS to verify an additional factor.
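The office-versus-café behavior can be sketched as a simple risk score. In the Python below, the office network range, the signal weights, the threshold, and the names `risk_score` and `requires_second_factor` are all hypothetical; commercial adaptive MFA products weigh many more signals.

```python
import ipaddress
from dataclasses import dataclass
from typing import Set

# Hypothetical corporate address range; a real deployment would load this from policy.
OFFICE_NETWORK = ipaddress.ip_network("10.20.0.0/16")


@dataclass
class LoginAttempt:
    ip: str
    device_id: str
    device_is_managed: bool


def risk_score(attempt: LoginAttempt, known_devices: Set[str]) -> int:
    """Add up simple risk signals; a higher score means a riskier login."""
    score = 0
    if ipaddress.ip_address(attempt.ip) not in OFFICE_NETWORK:
        score += 1  # outside the office network
    if attempt.device_id not in known_devices:
        score += 2  # device never seen for this user
    if not attempt.device_is_managed:
        score += 1  # personal or unmanaged device
    return score


def requires_second_factor(attempt: LoginAttempt, known_devices: Set[str],
                           threshold: int = 1) -> bool:
    return risk_score(attempt, known_devices) >= threshold


known = {"laptop-042"}
office = LoginAttempt("10.20.5.17", "laptop-042", device_is_managed=True)
cafe = LoginAttempt("203.0.113.8", "phone-7", device_is_managed=False)
print(requires_second_factor(office, known), requires_second_factor(cafe, known))
```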

Reduce the Risk of Unmanaged Devices

Many companies have now embraced remote work, so employees often use personal devices or less secure internet connections. A hacker could, for example, install password-stealing malware on a device through a compromised router. With MFA, a stolen password alone is not enough to access an account, which greatly reduces the risk posed by unmanaged devices.

Improve Employee Productivity and Flexibility

Remembering so many passwords can be a huge burden, so many people use simple passwords that are easy to crack. To avoid this, many companies enforce password policies that require employees to set stronger passwords and update them regularly. But this creates a new problem: people forget their passwords.

A forgotten password means a password reset, which costs time. MFA can instead let you sign in with a single-use code generated by an app or with a fingerprint scan.

Stay Compliant

If your company handles personally identifiable, financial, or health information, state or federal laws may require MFA. For example, MFA helps ensure that only authorized users can access protected health information, which can help your firm remain compliant with HIPAA (the Health Insurance Portability and Accountability Act).

To Sum Up

Multi-Factor Authentication methods are quite inexpensive and easy to deploy, and they provide a simple, effective layer of protection for users and the wider business network. So, if you haven’t adopted MFA yet, ask yourself why, and implement it as soon as possible.